Howdy, Stranger!

It looks like you're new here. If you want to get involved, click one of these buttons!

Quick Links

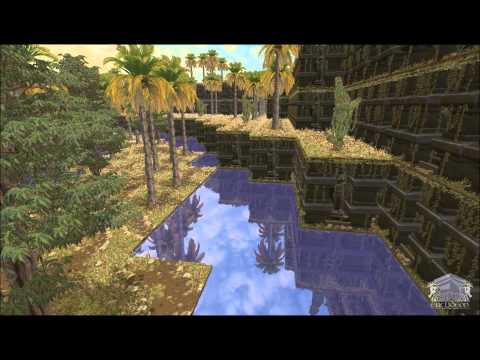

Major Graphic change coming to the industry

Quotations Those Who make peaceful resolutions impossible, make violent resolutions inevitable. John F. Kennedy

Life... is the shit that happens while you wait for moments that never come - Lester Freeman

Lie to no one. If there's somebody close to you, you'll ruin it with a lie. If they're a stranger, who the fuck are they you gotta lie to them? - Willy Nelson

Comments

I could've sworn I saw this a couple of months ago o.o

''/\/\'' Posted using Iphone bunni

( o.o)

(")(")

**This bunny was cloned from bunnies belonging to Gobla and is part of the Quizzical Fanclub and the The Marvelously Meowhead Fan Club**

Color me skeptical. It sounds like they've rediscovered voxels, and nothing more.

In the early days of 3D graphics, there were a variety of methods attempted, such as voxel ray casting, quadratic surfaces, and ray tracing. The reason the industry settled on rasterization is that rasterization is fast. There are some impressive effects that you can get with some other graphical methods (e.g., much better reflections via ray tracing) that aren't available for rasterization, but that's no good for commercial games if you're stuck at two frames per second.

If you want a technology to be viable for commercial games, then it needs to be able to deliver 60 frames per second while looking pretty nice on hardware that isn't unduly expensive. To make a seven minute demo video, you don't need to be able to hit 60 frames per second. You can do one frame per minute and leave a computer running for a week to make your video.
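To put rough numbers on that last point, here is a minimal sketch of the arithmetic. The 24 frames-per-second playback rate is an assumption (nothing here says what rate the video was encoded at), and `offline_render_days` is just a hypothetical helper name.

```python
def offline_render_days(video_minutes, playback_fps, minutes_per_frame):
    """Wall-clock days needed to render a video offline, one frame at a time."""
    total_frames = video_minutes * 60 * playback_fps
    total_minutes = total_frames * minutes_per_frame
    return total_minutes / (60 * 24)  # minutes in a day


# Seven-minute video, one frame rendered per minute.
# The playback rates below are assumed values, not figures from the demo.
for fps in (24, 30):
    print(fps, "fps:", offline_render_days(7, fps, 1), "days")
```

At an assumed 24 fps that comes to seven days of rendering, and at 30 fps a bit under nine, so "leave a computer running for a week" is about right.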

In order to prove that the technology is real, you have to let members of the tech media test it out in real time. Let someone who is skeptical have the controls, and move forward and back, turn left and right, and rotate the camera in real time. Prove that you can render it in real time, rather than having a ton of stuff preprocessed. If the technology works properly, then it shouldn't be that hard to do this. So why haven't they?

Above, I said that the reason why other graphical methods didn't catch on is that they were too slow. As time passes, graphics hardware gets faster, so one might think that once it gets fast enough, another method will be more efficient than rasterization.

But what about situations where the hardware available is dramatically faster today? For example, Pixar spends hours to render each frame of their movies, which means that they can take a hundred thousand times as long to render each frame as is necessary for a commercial game to run smoothly. And then they can do that on some seriously powerful graphical hardware, too, as spending a million dollars on hardware to render a movie would be a relatively minor expense for them. And they still use rasterization.
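The "hundred thousand times" figure is easy to sanity-check. A minimal sketch, assuming exactly one hour per film frame (the post only says "hours") and a 60 frames-per-second target for games; `slowdown_factor` is a hypothetical name.

```python
def slowdown_factor(hours_per_film_frame, game_fps=60):
    """How many game-frame time budgets fit into one offline-rendered frame."""
    seconds_per_film_frame = hours_per_film_frame * 3600
    # A game frame budget is 1 / game_fps seconds, so dividing by it
    # is the same as multiplying by game_fps.
    return seconds_per_film_frame * game_fps


# One hour per frame is an assumed round number, not a Pixar statistic.
print(slowdown_factor(1))
```

One hour per frame is 216,000 times the 60 fps frame budget, so "a hundred thousand times" is the right order of magnitude, and several hours per frame only widens the gap.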

This is at least partially inertia. Right now, video cards from both AMD and Nvidia are very heavily optimized for rasterization. They really aren't optimized for other graphical methods, though they are becoming better at doing more general workloads. But if some other method were vastly better than rasterization with that sort of large workload, it would still be worth it for Pixar to use that other method, even if it means taking several times as long to render each frame as it would for better optimized hardware.

Even if some other method were to be better, it would take a long time for the industry to transition. It takes about three years from the time that AMD or Nvidia start working on a new architecture to the time that the first new cards come to market. If everyone were to suddenly realize that Euclideon's method was far better than rasterization and games were to switch to use it, all graphical hardware released within the next three years wouldn't be able to run such games properly.

Furthermore, even once such hardware is out, you don't want to target a game at hardware that hardly anyone has yet. It would probably be five or six years before games could safely use the technology and have a decent potential playerbase available, and even then, needing to have a card that released in the last few years would lock out a large fraction of their potential playerbase.

It'd be helpful if they posted an information dump for the video: min/max/average FPS, projected FPS with a hardware implementation, the size of the elephant and other assets in the demo HDD-wise...

However, he stated that the demo was running (See: 7:00 and I'm assuming he means in realtime) at 20FPS (with superior versions in the works) based solely on a software solution, which is huge. I hope they have some in-house hardware guys for their next demo.

fakity fake fake.

and fake.

LOL

They're called voxels http://en.wikipedia.org/wiki/Voxel

Many games have used them in the past.

It has the benefit of detail, but it has negatives too.

*hardware currently isn't streamlined to use voxels, but vertices

*you need huge amounts of memory

*you need very specific animation methods

*you need to calculate lighting on a per-point basis, which is ridiculously expensive, so you end up calculating on a per vertex basis anyway
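For a feel of the memory point in the list above, here is a rough sketch of how a naive dense voxel grid scales. The 4 bytes per voxel (say, an RGBA color) and the grid sizes are assumptions for illustration; this says nothing about how the demo actually stores its data, and sparse structures such as octrees can cut these numbers down a lot.

```python
def dense_voxel_grid_bytes(side_length, bytes_per_voxel=4):
    """Bytes for a naive dense cube of voxels, side_length voxels per edge."""
    return side_length ** 3 * bytes_per_voxel


def to_gib(num_bytes):
    """Convert a byte count to gibibytes."""
    return num_bytes / 2 ** 30


# Grid sizes are arbitrary examples; memory grows with the cube of the edge.
for side in (256, 1024, 4096):
    print(f"{side}^3 voxels: {to_gib(dense_voxel_grid_bytes(side)):.2f} GiB")
```

Doubling the resolution along each axis multiplies the storage by eight, which is why uncompressed voxel scenes get out of hand so quickly.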

Saw a similar video well over a year ago. If something like this is gonna change things, it's gonna be a long ways off.

Make games you want to play.

http://www.youtube.com/user/RavikAztar

If you watch the video, they say up front that it originally debuted last year.

No less than a decade before this becomes viable.

That video had a very small set of very noticeably tiled assets. Nothing in it is moving, either; they even mentioned there are no animations in yet.

There's no "game" applied to this. Even if it's crude and a pre-scripted event, most other game-centric engine tech usually shows something that can sell the ideas of the games that can be made with the tech.

This is definitely an interesting take on where to advance the technology, but there are still WAY too many unknowns for this to be close to viable.

Can the tech do terrain deformation?

What fidelity of physics can it interact with?

Just how many unique assets can it load before buckling?

What kind of file size will it be creating?

And those are just tech-side. That doesn't even scratch personnel issues. If you're laser-scanning in half your foliage, that means the rest of the world needs to be built to that fidelity. What artist really wants to be relegated to modelling out detailed bark patterns when, for the in-game value of the asset, a fairly well-made trunk with a good texture map would offer the same effect?

This isn't to say it'll never be viable, but they're going to have a lot longer road than just a year before their concepts become practical.

Trying to switch development from a polygon-based world to their tech will be like trying to hard-shift programmers from C-derivatives to some completely new language. Sure it may be functional and people will experiment with it, but it's gotta move mountains with ease to really get the masses to look up from what they know and shift over.

Lets Push Things Forward

I knew I would live to design games at age 7, issue 5 of Nintendo Power.

Support games with subs when you believe in their potential, even in spite of their flaws.

They actually said on their website that they have animation in the engine, and that their SDK will be available in about a year, maybe.

Oh wells o.o Time will tell~

Anyways, as for voxels... IIRC doesn't the Crysis engine use voxels in terrain?

I remember this when it was first announced. It had something to do with using algorithms or some such to dictate where things were. Or some such thing.

It's interesting that these guys are still plugging away.

Godfred's Tomb Trailer: https://youtu.be/-nsXGddj_4w

Original Skyrim: https://www.nexusmods.com/skyrim/mods/109547

Serph toze kindly has started a walk-through. https://youtu.be/UIelCK-lldo

Changing technology like this is really hard.

It is kinda like when the video industry tried to change to Betamax. VHS was already too well established, and since a Betamax couldn't play VHS cassettes, it failed.

In this case, both Nvidia and ATI will first have to max out their GFX cards for both the regular rendering and for this. Then we still have to wait until enough people buy those cards for it to be commercially viable to release a game with the technology.

Of course things will eventually change as computers become better and better. Maybe making games will be closer to making movies in the future, and the companies might scan buildings and forests to make a game look completely real.

They can't do that right now because we just don't have enough memory. That will change: hard drives will soon be the same thing as RAM, one huge memory that the computer always has fast access to. But until that actually happens we will be stuck with the traditional graphics.

This might be the technology of the future, or not. Really hard to tell until we actually see how the computers will be then.

It does, but if you look at all those timelines, it's taken them about a decade to make that tech practical for development. And even still, CryTek isn't known for making engines that go easy on computers.

LoL well when I read the title I heard Patrick Stewart do the opening to the X-Men films about evolution. Yeah graphics do improve..almost every year.

Jymm Byuu

Playing : Blood Bowl. Waiting for 2. Holding breath for Archeage and EQN.

Imagine the WoW clones they could make with this

Although the tech is pretty incredible, it isn't new - the rendering engine is what's new here. Polygons aren't as "lame" as these guys make them out to be. Major movie cinematics and absurdly realistic 3d objects are still made with polygons and deformation meshes. Go to pixologic.com and look at some of their stuff. Zbrush's deformation maps will be mainstream before this. Granted, films are rasterized frames that are superimposed over the action generally, and undergo a lot of filtering and clean up, but the rendering capability is there - and one day it will be available in real time - not in the hours/days per frame that it currently takes.

PCs will be rendering in polys for a long time... at LEAST until they catch up with major motionpicture quality graphics - (the ability to render millions of polys in real time; don't misunderstand that - I know movie frames can take hours or days - I'm saying eventually) because that's the technology they are built on. Companies like nVidia aren't going to scrap their entire framework in anticipation of this any time soon.

And I'm sorry, with millions of polys... shit gets fkin' real.... as real as this demo.

Games will become like motion pictures and cinematics. Movies will go to voxels. Years and years later, games will go to voxels.

It's awesome to see that this framework is being realized, though.

the voice in the video is soooo annoying...

It may not be viable right now, but this is the future and anyone who disagrees need only be referred to Moore's Law. Look at games 10 years ago and compare them to today's best graphics. Imagine that growth exponentially over the next 10 years.
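As a rough illustration of what that kind of exponential growth would mean, here is a small sketch. It assumes the commonly quoted form of Moore's Law (a doubling about every two years); the doubling period is a parameter, and `moores_law_factor` is a hypothetical name.

```python
def moores_law_factor(years, doubling_period_years=2.0):
    """Growth multiplier after `years` if capacity doubles every period."""
    return 2 ** (years / doubling_period_years)


# The two-year doubling period is the usual rule of thumb, taken here
# as an assumption rather than a measured figure.
print(moores_law_factor(10))
```

Under that assumption, ten years gives roughly a 32x increase, and a faster 18-month doubling would give about 100x.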

Looks cool, but I'm more intrigued by physics engines and collision detection.

Actually Betamax came 1st (1975) and VHS later (1976). The problem was, while Betamax had better playback quality, VHS tapes had longer recording time. In addition, VHS worked better in the early camcorders than betamax format. Coupled with bad marketing moves by Sony and cut-throat price cutting by suppliers of VHS units, Betamax lost an increasing amount of market share until it became obsolete.

Sorry for the off-topic.

A man is his own easiest dupe, for what he wishes to be true he generally believes to be true...

Are the methods so different that the GPU architecture would need to change? Or is it an issue that can be resolved at the driver level? It's a very different timeline if it can be resolved by a driver, but I don't know whether or not that is possible.

Regarding Pixar, RenderMan supports many rendering modes, voxels included.

That's another thing. Poly count isn't the only thing that makes graphics look real. It's seriously more about physics and lighting in 90% of scenarios. And besides, like I said in my post above... the hate on polys is unnecessary... you can get CRAZY realistic with polygons. It's been proven time and time again - I guarantee you I could model something just as realistic as that stuff if I had an unlimited poly count and some deformation maps - hell, even a few million to work with. And it's much more likely since polys are what all existing tech is built around.

And I can make a scene in Zbrush or in Max with Zbrush def. maps with millions upon millions of polys - and rotate and spin just like that demo. It's when you add lighting, physics, and actually render the stuff that sucks the power.

Since this method would obviously take up an insane amount of memory for a demo, let alone a full-on game, they should probably look into furthering the development of HVD discs for output. It still looks cool, but the physics are what I'm interested in also.

yah along with bio-chips, 3d memory, large implementation of VRML, and 3D-OS. been waiting for them for over 10 years and still not seeing them being implemented anytime soon :D heck i'd be happy if they finally come out with a practical VR power glove :D

Seen this one before. Snake oil.

One day we'll have the technology to do virtually what our ancestors did in reality.